A community-led platform driven by a collective of proven investors. We distill market noise into clear, credible and actionable ideas, giving you the confidence to invest.

We live in an era of information overload. It is harder than ever to know what is worth paying attention to and who to trust. It’s time for a new approach.

Community

No gurus, just the collective intelligence of some of the smartest investors in the game. Our expert community represents more than $250bn in AUM. Together, we identify opportunities that no individual could spot alone.

Join Now

Clarity

Our platform curates market noise into concise, actionable investment insights, making complex ideas easy to understand and simple to act upon.

Join Now

Confidence

With curated insights from our expert community, you can discover alpha-generating ideas and invest with conviction.

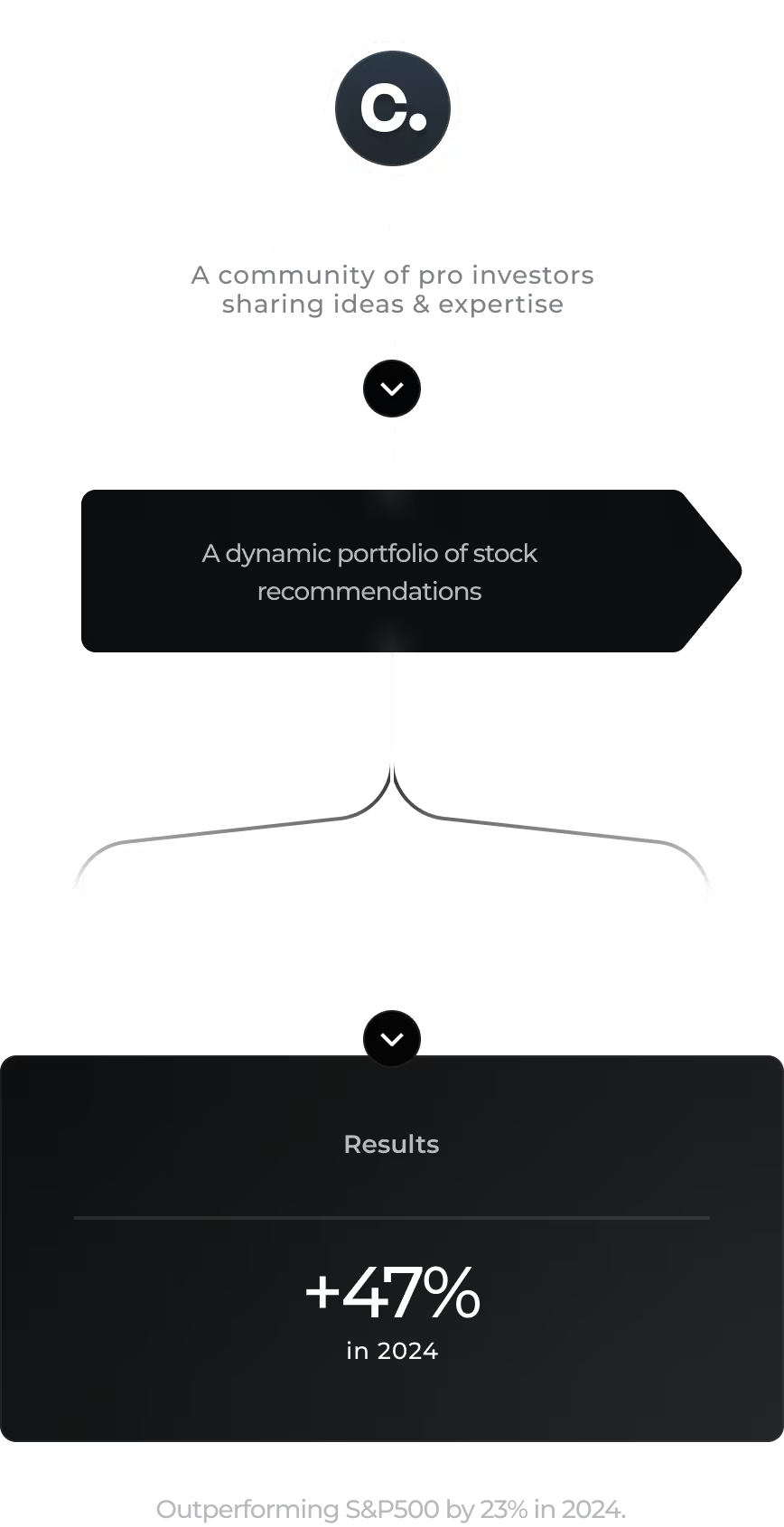

Join Now

Our alpha is crowdsourced from the collective intelligence of our pro investor community, a method that has proven to deliver superior investment returns.

Rachid B

“My biggest motivation is really the idea generation - the actionable ideas. It’s always good to be part of this conversation and to see what people are thinking.”

Tagg M

“I’ve made my money back 12 times over the cost of membership because of the ideas and alpha Curation generates.”

Richard Giroux, CFO

“Curation has successfully supported our retail investor strategy on an ongoing basis by communicating our specialist equity story to a generalist investor audience, at scale. This has helped raise significant investor awareness around MeiraGTx’s mission of harnessing the potential of genetic medicines.”

Dip your toe in and see if Curation’s trusted insights are a good fit for you.

Access our full library of stocks

Weekly thematic insights from the Curation Collective

Join the Curation Collective, an incredible community of expert investors sharing ideas.

Get curated, actionable investment ideas

Corporate access ‘on-demand’

Flagship in-person events

Exclusive member benefits

30-day free trial; cancel at any time during the trial period.

Give your team an edge by offering them a seat at the table within our community.

Fully compliant research service

Curated investment briefings delivered daily or weekly via the terminal

Is Curation’s platform free?

Yes. You can access our platform completely free by creating an account. Our mission is to level the playing field for retail investors, so there will always be a freemium option to help you make informed investment decisions, now and forever.

What is the Curation Collective?

The Curation Collective is our community of professional investors representing more than $250bn in AUM. It is our unique source of expertise and ideas, which gives us the confidence to invest with conviction. Joining requires a paid subscription, which provides access to our real-time community feed, actionable investment recommendations, private deal flow, networking, and more. Subscription to the Collective is open to all, but we consider it most suitable for sophisticated investors.

How do you make money?

Our primary commercial model is charging corporates to be showcased on the platform. Since corporates are looking for new investors, we believe the cost should sit with them, not retail investors. We make it clear which corporates have a commercial relationship with Curation, both on the platform and in our disclaimers. At the same time, we ensure content is as independent as possible, showing both bull and bear cases as well as key risks. We believe that corporates with an active retail investor strategy should be recognised and championed, as they understand the vital role that retail investors play in equity capital markets today.

What is Curation Connect?

Curation Connect is our investor relations and communications division, designed to help corporates engage with retail investors. You can learn more on our Corporates page or by contacting Calum Marris (calum.marris@curationcorp.com), Head of Equity Sales.

Is Curation regulated?

Certain activities of the Curation Collective (trading name of Fat Gladiator Limited) are regulated by the FCA. Fat Gladiator Limited is an appointed representative of Laven Advisors LLP, which is authorised and regulated by the Financial Conduct Authority.